The Role of AGI in Cybernetic Immortality

Ben Goertzel, Ph.D.


What makes Novamente different from other approaches to AGI? The real answer to that gets deep into the details. But on a more philosophical level, one thing I think makes the Novamente approach unique is that we pay more attention to the emergent structures of intelligence -- things like self, free will, reflective awareness, and so on. I have spent a lot of time trying to understand, on a theoretical basis, how these things can emerge from the lower-level learning and knowledge representation infrastructure of Novamente. And I feel like most people have not taken that kind of approach.

There are AI systems that are based on logic -- logical reasoning -- and don't pay much attention to self-organization, emergence and complexity. There are approaches based on neural and evolutionary learning, which are great but hardly deal with language and abstract reasoning at all. And there doesn't seem to be much understanding of how to make abstract reasoning emerge from these low-level structures.

Then there are integrated systems that combine various modules -- but in a kind of plug-and-play way that doesn't give much thought to how they interoperate to produce emergent structured intelligence. I think it’s really necessary to think about these high-level structures: identity, self-awareness, long-term memory and how it self-organizes. How to get these high-level properties of mind to emerge out of computer science and infrastructure is not obvious, and it has been mostly what I’ve thought about over the last couple of decades.

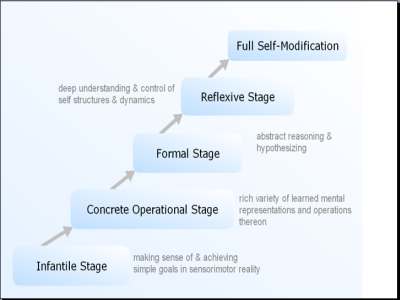

Getting back to the simulation world and teaching baby AIs: In terms of learning, I think a lot of Piaget’s general framework, although obviously many of the details of his thinking need to be updated in accordance with the recent understanding of developmental psychology. I think about Novamente’s progress in terms of developmental stages much like Piaget’s. You can talk about an infantile stage; then a concrete operational stage, where we have a richer variety of mental representations and operations; a formal stage, where you can do abstract reasoning and hypothesis; and finally, the reflective stages and full self-understanding.

Piaget mostly talks about the first three stages. Later psychologists talk about post-formal thinking, involving deep reflection on the foundations of self and thought. AI can go even further than that; it can completely modify its own mind.

We are still at the infantile stage with Novamente. I think it is important to ascend that ladder methodically and to be sure the system has really mastered each stage before you go any further.

Image 9: Novamente Stages

The details of the Novamente architecture and how it represents knowledge show the different parts of the system: short-term and long-term memory, reasoning, learning, perception, and so on. It’s a fairly complex system using cutting-edge computer science, logic, evolutionary learning, and probability theory, and using these things in a way that gives rise to the emergent structures of self, identity, and so forth.

The one point in terms of the AI architecture that I do want to harp on is the process I call map formation. What that means is as follows. Suppose you have a number of things in the system’s memory -- say the nodes in the system’s memory representing a bunch of related things like cat, mouse, tail, furry. Basically cat-related stuff. Then there is a cognitive process in the Novamente system called map encapsulation, by which the system recognizes, “Hey, all these guys are used together. So let’s make a single concept to group them all together.” Then a “cat-related” node would be created by the map encapsulation process, and could then enter into further reasoning.
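To make the idea concrete, here is a minimal Python sketch of that kind of grouping. It is not Novamente's actual algorithm, which the talk does not spell out. It assumes a simple co-activation heuristic: nodes that show up together in a log of activations at least `min_count` times are linked, and each linked group of at least `min_size` nodes gets its own new concept node. The names `find_maps`, `encapsulate`, `min_count`, `min_size`, the `map:` prefix and the sample log are all hypothetical.

```python
from collections import Counter
from itertools import combinations


def find_maps(activation_log, min_count=2, min_size=3):
    """Return groups of nodes that are repeatedly active together (toy 'maps')."""
    # Count how often each pair of nodes is active in the same episode.
    pair_counts = Counter()
    for active in activation_log:
        for a, b in combinations(sorted(active), 2):
            pair_counts[(a, b)] += 1

    # Link nodes whose co-activation count reaches the threshold (an assumption).
    neighbors = {}
    for (a, b), count in pair_counts.items():
        if count >= min_count:
            neighbors.setdefault(a, set()).add(b)
            neighbors.setdefault(b, set()).add(a)

    # Connected components of that link graph are the candidate maps.
    seen, maps = set(), []
    for start in sorted(neighbors):
        if start in seen:
            continue
        component, stack = set(), [start]
        while stack:
            node = stack.pop()
            if node in component:
                continue
            component.add(node)
            stack.extend(neighbors[node] - component)
        seen |= component
        if len(component) >= min_size:
            maps.append(frozenset(component))
    return maps


def encapsulate(memory, activation_log, **kwargs):
    """Add one explicit concept node per detected map; return the new node names."""
    created = []
    for members in find_maps(activation_log, **kwargs):
        name = "map:" + "+".join(sorted(members))  # hypothetical naming scheme
        memory[name] = members
        created.append(name)
    return created


# Hypothetical memory and activation log, echoing the cat example.
memory = {n: frozenset() for n in ["cat", "mouse", "tail", "furry", "car", "road"]}
log = [
    {"cat", "mouse", "tail"},
    {"cat", "tail", "furry"},
    {"cat", "mouse", "furry"},
    {"car", "road"},
    {"cat", "mouse", "tail", "furry"},
]
new_nodes = encapsulate(memory, log)
print(new_nodes)  # ['map:cat+furry+mouse+tail']
```

In this toy run the four cat-related nodes are grouped under a single new node, while `car` and `road`, seen together only once, are left alone. Because the new node lives in the same `memory` as the original ones, it could itself take part in later activations, which is the sense in which the encapsulated concept can enter into further reasoning.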

This kind of process is a system recognizing patterns in what it does, and then embodying these patterns concretely and storing them explicitly within its own memory. It relates to the idea I raised in the comments to someone else’s talk earlier, about a system taking its own implicit goals (what it is acting like it’s doing), and embodying those explicitly as explicit goals (what it thinks it’s doing). It also relates to the self. We recognize patterns of what we actually are, sometimes accurately, sometimes erroneously. We embody them as an explicit model of what we are. This process of recognizing patterns in yourself and embodying them explicitly and symbolically gives rise to new patterns; this feedback is an important thing. I think if you get that feedback to work in an AI system you can get reflective awareness to work.

I think we’re about one year away -- if we get a bit more funding -- from the creation of a fully functional, artificial infant.

Image 10: Goal For Year One After Project Funding

Once we get that, we can work toward ascending the next step of the ladder. I think the proper goal is to make an artificial baby in the simulation world, and I estimate we are about seven to ten man-years of programming and testing away from that. And then on to the next level in the Piagetian ladder.

I’m not saying that it’s a trivial thing; it’s hard. I’m just saying it’s a palpable thing -- it’s a concrete, well-charted series of steps. I think this is a little different from AI through brain emulation, which relies on a whole bunch of unknown stuff about mapping the brain, mind uploading, nanotechnology, etc.

If this kind of research program, either by me or others, is successful, it will give us a lot of things. It will give us some amazing technologies, and a path to superhuman AGI. And it will also, like I mentioned before, give us a way to experiment with notions of identity, immortality, and self-modification.

A quick thanks to Bruce Klein, who helps me run Novamente, and to the excellent scientists and engineers who work with me on Novamente, helping me to try to bring the baby minds to life.

Ben Goertzel, Ph.D.

CEO/CSO Novamente LLC

Involved in AI research and application since the late 1980s. Former CTO of Webmind, a thinking-machine company with 120+ employees. PhD in mathematics from Temple University. Held several university positions in mathematics, computer science, and psychology in the US, New Zealand and Australia. Author of 70+ research papers and journalistic articles, and of five scholarly books dealing with topics in cognitive sciences and futurism. Principal architect of the Novamente Cognition Engine.